Understanding Intelligence

Alan Turing, in his 1950 paper “Computing Machinery and Intelligence,” opened with the question “Can machines think?”, which is often restated as “Can machines do what we (as thinking entities) can do?” To answer it, he described his now-famous test, in which a human judge holds a natural-language conversation over a text interface with one human and one machine, each of which tries to appear human; if the judge cannot reliably tell which is which, the machine is said to pass the test. The Turing Test bounds the domain of intelligence without defining what it is. We recognize intelligence by its results.

John McCarthy, who coined the term Artificial Intelligence in 1955, defined it as “the science and engineering of making intelligent machines.” The definition is straightforward, yet few terms have been more obfuscated by hype and extravagant claims, imbued with both hope and dread, or denounced as fantasy.

Over the succeeding decades, the term has been loosely applied and is now often used to refer to software that does not, by anyone’s definition, enable machines to “do what we (as thinking entities) can do.” The process by which this has come about is no mystery. A researcher formulates a theory about what intelligence, or one of its key components, is and attempts to implement it in software. “Humans are intelligent because we can employ logic,” and so rule-based inference engines are developed. “We are intelligent because our brains are composed of neural networks,” and so software neural networks are developed. “We are intelligent because we can reason even under uncertainty,” and so programs implementing Bayesian statistics are created.

It doesn’t matter that none of these approaches ever got even to first base at passing the Turing Test; the term Artificial Intelligence was applied to them in the first place, and it stuck. Thus the field of Artificial Intelligence has come into existence while the core concept of “intelligence” itself remains vague and nebulous. People have an intuitive notion that it is about consciousness, self-awareness, and autonomy. As it turns out, these intuitions, like many others (that heavier things fall faster than light ones, or that giant rocks don’t fall out of the sky), are wrong.

Going back to Turing, the cogency of his test rests upon the fact that we recognize intelligence when we see it or its results. We know when someone understands what we say to them and that is undeniable proof that intelligence is at work. Let’s step back and examine the process of language comprehension.

One person composes a message and sends it to another, who processes it. We often talk about the meaning of words but, of course, they have no inherent meaning; they are arbitrarily chosen symbols assigned to represent things in the world around us or, more properly, the ideas of those things that exist in our minds. The grammar of the message and the form of the words in it encode instructions on how the receiving party should connect the ideas corresponding to the words so as to recreate, or at least approximate, in the receiving mind the meaning that the sending mind wished to convey.

Different languages have completely different vocabularies (word-symbol sets), and grammar varies greatly as well, but people can learn each other’s languages and translation is possible because humans all live in the same world and have corresponding ideas of the things that are experienced in it. Thus, any communication using symbols depends on corresponding sets of common referents for those symbols. These sets of common referents are our ideas and our knowledge about the world. Knowledge is not just a bag of random thoughts but an intricately connected structure that reflects many aspects of the external world that it evolved to comprehend; it is, in fact, a model, an internal model of the external world.

People’s world models do vary greatly but the momentous invention/discovery of language has endowed human beings with a means to transmit ideas from one to another, expanding, deepening, correcting, and enriching one another’s models.

It has often been suggested that humans cannot think without language and that language and intelligence are one and the same. It is certainly true that without language humans could not accumulate the knowledge that has resulted in civilization. Without our languages, humans would still be living in nature, and it would be even harder than it is to define the essential difference between Homo sapiens and other closely related species.

It is likely that the capacity to construct ordered world models and the capacity for language both depend upon a capability for symbolic processing, and that this capability lies at the root of the essential difference of humanity. The two are nonetheless very distinct processes, and the model is a prerequisite for language, not the other way around. A human living alone, separated from its kind without the opportunity to learn a language (the classic “raised by wolves” scenario), will still make alterations to its environment that would be impossible if it could not first have imagined them. Imagination, too, requires the model as a necessary prerequisite.

That fact suggests a thought experiment that further clarifies the essence of intelligence. Imagine traveling through space and discovering a planet with structures on the surface created by an alien life form. Can we tell whether the species was intelligent by looking at the structures? If we see patterns in the structure that repeat in a way that indicates an algorithm at work then, no matter how monumental the structures, they are probably akin to beehives, ant hills, beaver dams, and bird nests, the result of instinctive repetitive behaviors. But if they reflect an individuality clearly based on considerations of utility, environment, and future events, they had to be imagined before they could be constructed and that means the builders had an internal world model. It could be a vastly alien intelligence that evolved under conditions so different from the world our model evolved to represent that we could never really communicate, but the essence of intelligence is the same and is unmistakable.

For now, the only example we have of an intelligent entity is ourselves and it is difficult to abstract the essence of intelligence from the experience of being an intelligent being. What about consciousness, self-awareness, and autonomy? Like intelligence, these things are essential to being a human being but are they one and the same?

The answer is that they may support one another but they are not the same. All these characteristics are “off-line” in a hypnotized person, yet that person can still process language, still access and update their world model. Consciousness is the experience of processing real-time data – other animals do that as well and we do not say they are intelligent because of it. Self-awareness is an examination of an entity’s model of itself. As with language and imagination, the capacity to build an internal model is a prerequisite for self-awareness but not the other way around.

Inseparable from consciousness and self-awareness, humans also experience desires and motivations. One motivation that seems particularly inseparable from the experience of being an intelligent being is the desire to use that intelligence to change the world. The perception that intelligence and the desire to employ it to alter the world are connected in human beings is correct.

Humans evolved intelligence, manual dexterity (hands), and the desire to use the first two to change and control their environment, in parallel. All three are a matched set that has everything to do with being a human being (intelligence and hands won’t help you survive if you don’t have the desire to use them) but they are three separate phenomena.

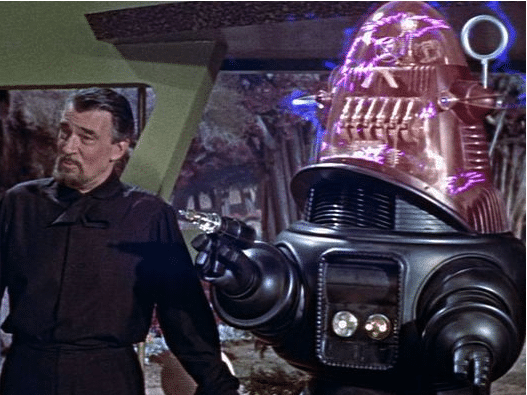

The association between intelligence and the motivation to alter our environment in humans has led to a common misconception about artificial intelligence. If it is possible to build an artificial intelligence that is as intelligent as a human, it will probably be possible to build one that is more intelligent than a human, and such a superior AI could then build another superior to itself, and so on. This is the so-called “singularity” popularized by Ray Kurzweil and others. People naturally feel that a vastly superior and powerful artificial intelligence must necessarily experience the desire to exercise its power, a fearful (for humans) prospect. But giving a robot hands does not automatically endow it with a desire to pick things up and throw them around, and neither does endowing it with intelligence. Whatever motivations robots are given will be there by the design of their builders, not as some spontaneous or unintended result of their intelligence, which their builders also give them.

It is now possible to define, with some clarity, what intelligence is: not human intelligence, not alien intelligence, not artificial intelligence, but the thing itself. We can do this without the usual appeals to how humans experience the phenomenon of being intelligent beings.

Intelligence is the process through which a computational information processor creates a model within processor memory of external phenomena (knowledge) of sufficient fidelity to predict the behavior of and/or control those phenomena.

The definition has both a qualitative and quantitative aspect. It is in the quantitative aspect that we can precisely relate the ancillary functions of language, imagination, consciousness, and self-awareness that are so closely related to it yet remain distinct from the core concept of model building. How much fidelity does the model have to have, how well must it predict, and how much control over the external world must it enable before we can properly call the system that processes it intelligent?
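As a rough illustration of the definition and its quantitative side, the following Python sketch builds a tiny internal model from observations, uses it to predict and to control, and scores its fidelity. Everything in it is a hypothetical stand-in chosen for brevity: the `WorldModel` class, the `fidelity` measure (mean absolute prediction error), and the linear `external_world` function are assumptions for the sake of the example, not part of the definition itself.

```python
from dataclasses import dataclass


@dataclass
class WorldModel:
    """Hypothetical internal model: a straight line fitted to observations."""
    slope: float = 0.0
    intercept: float = 0.0

    def learn(self, observations):
        # Build the model from (input, outcome) pairs by ordinary least squares.
        n = len(observations)
        mean_x = sum(x for x, _ in observations) / n
        mean_y = sum(y for _, y in observations) / n
        var_x = sum((x - mean_x) ** 2 for x, _ in observations)
        cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in observations)
        self.slope = cov_xy / var_x
        self.intercept = mean_y - self.slope * mean_x

    def predict(self, x):
        # Prediction: what the model expects the world to do for input x.
        return self.slope * x + self.intercept

    def control(self, target):
        # Control: invert the model to pick the input expected to yield target.
        return (target - self.intercept) / self.slope


def fidelity(model, held_out):
    # Assumed fidelity measure: mean absolute error on unseen observations.
    # Lower error means higher fidelity.
    return sum(abs(model.predict(x) - y) for x, y in held_out) / len(held_out)


def external_world(x):
    # The external phenomenon; the model never sees this rule, only its results.
    return 3.0 * x + 2.0


model = WorldModel()
model.learn([(x, external_world(x)) for x in range(5)])
error = fidelity(model, [(x, external_world(x)) for x in range(10, 15)])
action = model.control(target=20.0)
```

In this toy setting the qualitative aspect is that a model exists at all, while the quantitative aspect is how small `error` is and how closely `external_world(action)` lands on the target.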

Albert Einstein is very properly called a genius. Why? He created a model of the world, of the entire space-time continuum in fact, of such unprecedented fidelity that humankind’s ability to predict and control the external world was moved forward by a quantum leap. Now, what if Einstein had been the hypothetical “raised by wolves” person described earlier, without language or culture to support the development of his internal model? Maybe he would have invented a better way to chip flint, but who could he tell about it?

Functional intelligence requires accumulated knowledge and that requires language. The capacity to monitor and predict the behavior of our external environment requires real-time analysis of incoming information that can be related to our stored model and that requires consciousness. Control of our environment requires that we are self-motivated and that is autonomy which implies goals that need to be pursued in order to maximize internal utility functions, a process that is meaningless without self-consciousness.

Will artificial intelligence have all these characteristics? Yes, but they won’t spontaneously appear as the result of reaching some level of complexity or a by-product of some master yet undiscovered algorithm. Each will have to be purposely designed and integrated into the core processes that support its intelligence; and the capacity to build a high-fidelity world model